Radiologists are the medical detectives behind every X-ray and MRI. They do not run the scanner. Instead, they spend their days in a dimly lit room looking for signs of disease across many ‘slice’ images of a patient’s body. Each scan can look like a picture puzzle, and a radiologist has to solve many of these puzzles during every shift.

Even a highly skilled professional is human and will eventually experience perceptual fatigue after staring at images for hours. It is like trying to find one specific person in a large group photo: eventually your eyes get tired. Studies have shown that perceptual fatigue is a significant source of error in the interpretation of medical images, not because the radiologist lacks knowledge or training, but because of the sheer volume of information the radiologist’s brain has to process.

The goal of bringing artificial intelligence (AI) into radiology is not to replace radiologists but to assist them in their daily workflow. The idea is to give the radiologist a tireless partner that pre-reads images before they are viewed, flags subtle changes that a fatigued radiologist might miss after hours of work, and lets the radiologist focus on what matters most to the patient’s care.

AI in Medical Imaging: Smarter Scans with AI

Machine learning, particularly its deep learning subfield, is used to analyze medical imaging data, specifically X-ray, computed tomography (CT), magnetic resonance imaging (MRI), ultrasound, and mammography images.

These systems learn trends and patterns from large volumes of labeled medical images. During training, the model’s predictions are compared with a physician’s annotated findings (such as “lung nodule” or “no bleed”), and the model is adjusted to reduce the difference and increase accuracy.
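To make the “compare, then adjust” idea concrete, here is a minimal sketch in plain Python. It is not how any real imaging product is built: each “image” is reduced to a single made-up number, and the names `features`, `labels`, `predict`, and `train` are assumptions chosen for illustration. Real systems learn from the pixels themselves with deep neural networks, but the loop has the same shape.

```python
import math

# Toy stand-in for images: each scan is reduced to one made-up feature
# (for example, average brightness of a suspicious region).
# Label 1 = physician marked a finding, 0 = physician marked no finding.
features = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 0, 1, 1, 1, 1]


def predict(weight, bias, x):
    """Return the model's estimated probability that a finding is present."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))


def train(features, labels, steps=5000, learning_rate=0.5):
    """Repeatedly compare predictions with labels and adjust the parameters."""
    weight, bias = 0.0, 0.0
    n = len(features)
    for _ in range(steps):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(features, labels):
            error = predict(weight, bias, x) - y  # prediction vs. physician label
            grad_w += error * x
            grad_b += error
        # Nudge the parameters in the direction that reduces the error.
        weight -= learning_rate * grad_w / n
        bias -= learning_rate * grad_b / n
    return weight, bias


weight, bias = train(features, labels)
print(predict(weight, bias, 0.9) > 0.5)  # high-feature scan is flagged
print(predict(weight, bias, 0.1) > 0.5)  # low-feature scan is not
```

The only thing the program is ever told is how far its guesses were from the physician’s labels. Everything it “knows” comes from that repeated correction.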

Once trained, the model can identify and highlight potential problems in an image, measure anatomical structures, and provide possible clinical findings.

AI technology is also being used in radiology as a “second reader”. AI can quickly sort through images to identify urgent conditions, such as a possible stroke or internal bleeding, and deliver those images to the radiologist first. In addition, AI can flag areas of an image that may contain small, difficult-to-see anomalies that the radiologist may have missed. Finally, AI can perform many routine tasks in radiology, such as segmenting organs, estimating bone age, and monitoring tumor growth over time. Using AI technology in radiology can lead to greater consistency, fewer work hours for the radiologists, and quicker turnaround times for patients. These benefits are most evident in high-volume environments.

AI doesn’t “understand” images in the way a clinician would. Rather, it finds statistical trends in images. How well an AI performs therefore depends on image quality and on how closely the patient population being scanned mirrors the population used to train the model. Models can also be influenced by differences across hospitals or scanners, as well as by protocol variations. A high number of false positives could create additional follow-up work for clinicians, while false negatives may harm patients if clinicians rely too heavily on the tool.
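False positives and false negatives are usually summarized with two numbers: sensitivity (the share of real findings the tool caught) and specificity (the share of healthy cases it correctly left alone). The sketch below computes both from a small invented set of results. The function names and the sample data are illustrative assumptions, not output from any real tool.

```python
def confusion_counts(predictions, truths):
    """Count true/false positives and negatives for paired boolean results."""
    tp = fp = tn = fn = 0
    for predicted, actual in zip(predictions, truths):
        if predicted and actual:
            tp += 1  # tool flagged it, and the finding was real
        elif predicted and not actual:
            fp += 1  # false alarm: extra follow-up work
        elif not predicted and actual:
            fn += 1  # missed finding: potential harm
        else:
            tn += 1  # correctly left alone
    return tp, fp, tn, fn


def sensitivity(tp, fn):
    """Fraction of real findings that the tool flagged."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Fraction of healthy cases that the tool did not flag."""
    return tn / (tn + fp)


# Invented example: what the tool said vs. what the radiologist confirmed.
ai_flags = [True, True, False, False, True, False, False, False]
confirmed = [True, False, False, False, True, True, False, False]

tp, fp, tn, fn = confusion_counts(ai_flags, confirmed)
print(f"sensitivity={sensitivity(tp, fn):.2f}, specificity={specificity(tn, fp):.2f}")
```

A tool can score well on one number and poorly on the other, which is why both are reported.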

Due to this risk, many AI-based medical imaging tools undergo clinical validation studies, are monitored once deployed, and are generally deployed under human supervision. In addition to being used in conjunction with a clinician, many of these tools are classified as medical devices and therefore must meet regulatory standards for safety and performance. The best method of using AI for medical imaging applications today is through collaboration: the AI performs fast processing and identifies image trends, while the clinician provides contextual information, makes judgments, and assumes responsibility.

So, What Is “Medical Imaging AI” in Simple Terms?

When we think about AI, we often imagine robots as depicted in the movies. However, medical imaging analysis is not an artificial intelligence with the ability to think or understand what a lung is. Medical imaging analysis is simply specialized software designed to perform a single task: identifying patterns in images. While this is certainly a powerful form of software, the idea that it can understand (i.e., have a “thought”) is misleading. This type of software is best viewed as a high-powered magnification tool for identifying a specific aspect of an image.

The AI tool acts like a tireless assistant to the radiologist (the doctor who interprets your scan). Think of the “Find” function on a computer, which searches an enormous document for a single word. In a similar way, the AI scans an image before the radiologist sees it, identifying subtle patterns that the human eye might miss. It is another set of eyes that does not get tired, helping the radiologist focus on the most important part of the image. However, unlike general-purpose assistants (like Siri), diagnostic AIs are specialists. For example, an AI that finds small fractures in an X-ray cannot find pneumonia in a lung scan. Each AI was taught to be an expert at finding one thing by studying a very large number of example images of that one thing. This narrow focus is why they can be so successful. So how do you train a computer to look at images and recognize disease?

Medical Imaging Technology: Modern MRI, CT, and X-ray Tools

Medical imaging technologies create images of the inside of your body. Clinicians use these images to identify disease, plan treatment, guide procedures, and track healing without performing surgery. The five most common imaging modalities are X-ray, computed tomography (CT, also called CAT scans), magnetic resonance imaging (MRI), ultrasound, and nuclear medicine (PET and SPECT).

An X-ray is one of the oldest and fastest forms of medical imaging. It uses low levels of ionizing radiation to create an image of dense objects such as bones, and it can also show certain lung conditions. A CT scan is an advanced version of X-ray technology. Instead of producing a single flat image, a CT scanner takes X-ray measurements from many angles, reconstructs them into cross-sectional slices, and can combine those slices into a 3D image of organs, blood vessels, and traumatic injuries. While CT shows internal organs and injuries in more detail than a plain X-ray, it generally requires a higher radiation dose. Clinicians therefore use dose-reducing techniques to limit radiation exposure while maintaining image quality.

MRI provides images of soft tissues such as the brain, spinal cord, muscles, and joints by using a combination of strong magnetic fields and radio waves, rather than radiation. MRI sequences can be designed to examine different tissue characteristics, enabling high-quality imaging of tumors, inflammation, and stroke. In contrast, ultrasound produces images of internal structures using sound waves and is popular for its portability, cost-effectiveness, and lack of radiation. Ultrasound is commonly used during pregnancy assessments, echocardiograms, and to evaluate abdominal organs and blood flow.

Unlike imaging techniques that focus on the body’s anatomy and structure, nuclear medicine images the way the body functions by using a small amount of radioactive material called a “tracer”. The tracer is introduced into the body, typically by injection, and the nuclear medicine scanner then measures how much of the tracer accumulates and where. This provides valuable information about how well an organ is functioning, whether there are signs of cancer metastasis, whether the heart is receiving adequate blood supply (perfusion), or whether there is abnormal metabolic activity.

In addition to producing high-quality images, modern medical imaging technologies use digital storage and communication systems (PACS), standardized image formats (DICOM), and software to create three-dimensional reconstructions and measure the size of objects within those images. New and evolving trends in medical imaging technology continue to address issues related to radiation dose, scan time, device portability, and the application of artificial intelligence to assist clinicians in improving both the speed and accuracy of their diagnostic decisions.

How Do You Teach a Computer to Read a Medical Scan?

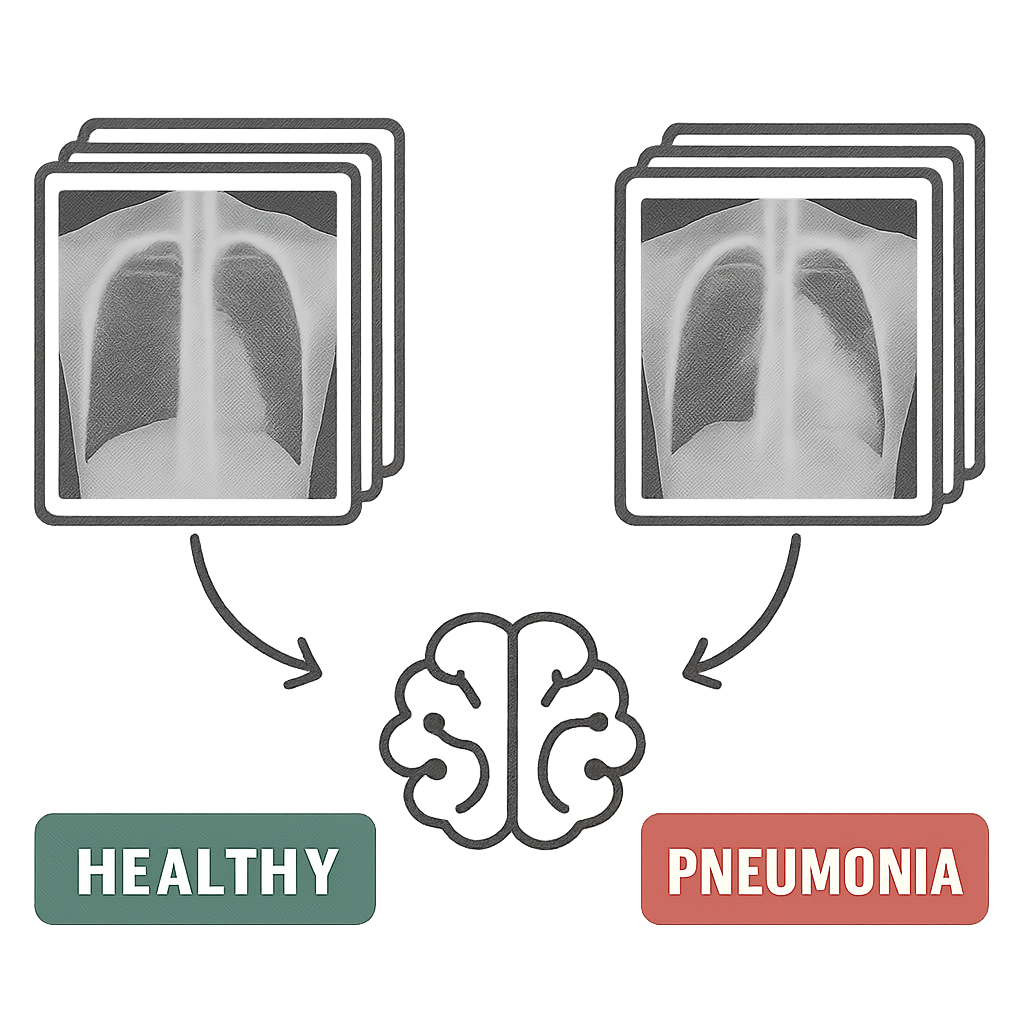

You cannot simply hand someone a medical textbook and expect them to become a doctor. Teaching an AI to read a scan is more like showing a student a huge set of flashcards. Like any training, it depends on access to a large library of examples. The program is not given specific rules for what pneumonia looks like on an image. Instead, the AI must work this out through its own pattern discovery in the data.

The images used to train the AI are not random images. Human experts have reviewed each one and labeled it as either healthy or diseased. If you were to take a million chest X-rays that show pneumonia and label them “pneumonia,” and a million normal chest X-rays and label them “healthy,” you would have a collection called labeled data. This collection of images, along with their corresponding labels, is the actual curriculum the AI will use to study.

By analyzing millions of labeled images, the AI makes connections between them. It learns to identify the subtle features of texture, shadow, and shape that tend to appear in “Pneumonia” images but not in “Healthy” ones. No single human could review this many images, so the AI can learn from far more examples than any doctor could see in a lifetime.

After learning from such a large amount of data, the AI becomes an expert at recognizing the tell-tale visual signs of a particular disease. The AI will never “think” as a doctor does; however, it has been trained to spot the same visual cues that doctors use in diagnosing patients. Because AI can be incredibly focused, it can make remarkable observations, such as finding the proverbial needle in a haystack: the smallest crack in an X-ray image.

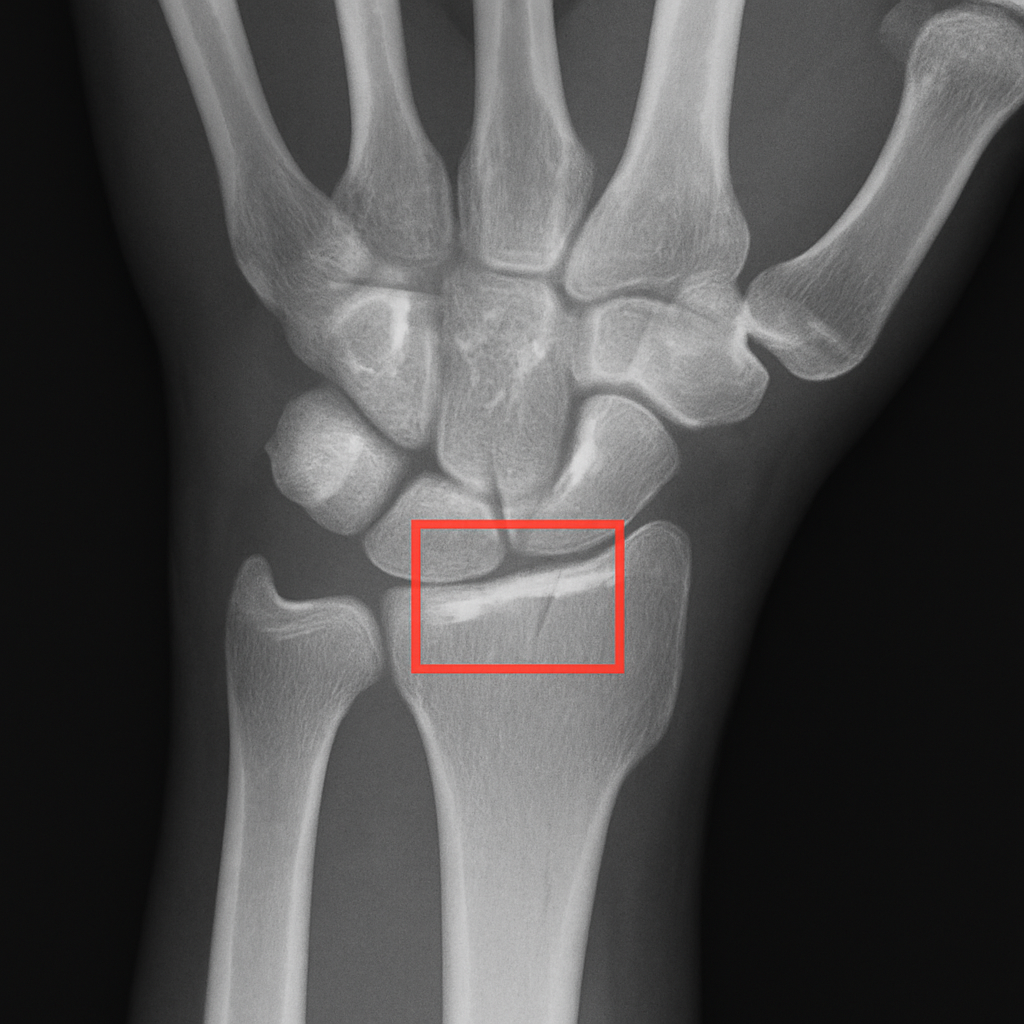

Finding the Needle in a Haystack: How AI Spots Tiny Fractures

When you have an X-ray after a fall to check for a broken wrist (one of the most commonly broken bones), a small crack can be very hard to spot with the human eye, even across several images. Radiologists also have to sort through a large number of images every day, which often creates a backlog and delays the answer to whether a bone is fractured. An additional pair of extremely fast eyes can help.

AI is an important part of this process because it can review the patient’s X-ray or CT scan within seconds and, if it finds indicators of a potential fracture, send that case to the radiologist immediately. In other words, the radiologist sees the most serious cases first, which helps prevent medical imaging errors and reduces the time before a patient receives a diagnosis.

The ability to identify what is almost impossible to see with your own eyes is one way AI can help provide a quick and accurate diagnosis of a fractured bone. However, using that same type of pattern recognition for early cancer detection holds a much larger promise. Instead of looking for a small fracture in an X-ray, you would be looking for the first signs of disease.

The Power of a Second Look: AI and Early Cancer Detection

That same skill of noticing a tiny deviation in a pattern can be a lifesaver in early cancer detection. On a mammogram, for example, the first signs of breast cancer are often tiny and extremely difficult to tell apart from dense healthy tissue. The radiologist must sift through complex mammogram images to identify subtle discrepancies. If one is missed, the diagnosis could be delayed, so a second, hyper-vigilant set of eyes is very valuable.

The physician receives a robust visual aid to help them evaluate the mammogram, rather than simply getting an automated “yes/no” from the AI. The AI provides the physician with a “heat map” that is similar in concept to a weather forecast map overlaid on the mammogram. The heat map highlights regions the AI has identified as suspect, displaying bright red and yellow; these are the same colors used in weather forecasting maps to indicate high temperatures and storms. The heat map does not replace the physician’s professional judgment; rather, it serves as a highly trained assistant, pointing out specific locations that warrant close examination by the physician.
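As a rough illustration of how a heat map overlay can be built, the sketch below turns a small grid of suspicion scores into colors. The scores, the thresholds (`yellow_at`, `red_at`), and the function names are all assumptions made up for this example. Real products choose their own color scales and cut-offs.

```python
def heat_color(score, yellow_at=0.5, red_at=0.8):
    """Map a suspicion score between 0 and 1 to an overlay color, or None."""
    if score >= red_at:
        return "red"
    if score >= yellow_at:
        return "yellow"
    return None  # leave the underlying image untouched


def build_overlay(score_grid):
    """Apply heat_color to every cell of a 2D grid of scores."""
    return [[heat_color(score) for score in row] for row in score_grid]


# Invented 3x3 patch of per-region scores from a hypothetical model.
scores = [
    [0.1, 0.2, 0.1],
    [0.3, 0.9, 0.6],
    [0.1, 0.5, 0.2],
]

overlay = build_overlay(scores)
for row in overlay:
    print(row)
```

Only the colored cells are drawn over the mammogram, so the physician’s eye is pulled to the center of the patch while the rest of the image stays as it was.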

AI is designed to be a safety net for doctors. Traditionally, a “second read” means two doctors review the same mammogram to help prevent missed findings, and AI can now serve as that second reader. When compared side by side, studies have shown that doctors who work with AI assistance detect more cancers than those who do not. In effect, machine learning gives doctors an immediate second opinion that can help catch cancers a single pair of eyes might miss.

Seeing the Invisible: How AI Helps Analyze Lung and Brain Scans

A mammogram is like a photo of you, while MRI or CT scans are like a very detailed three-dimensional model of you (made from hundreds of two-dimensional images, called slices). For doctors, looking at those models means going through them slice by slice. AI systems, however, can analyze all the slices, and therefore the whole three-dimensional model, at once. This whole-volume view is particularly useful for analyzing complex organs such as the brain and lungs.

In addition to identifying potential health issues, the ability to view organs in their entirety lets AI measure the size or extent of a problem more precisely than a visual estimate. For example, a doctor treating someone for pneumonia wants to know how much of the lung is affected. Rather than estimating this by eye, AI algorithms designed to analyze lung CT scans can automatically calculate the percentage of the lung affected by pneumonia. The same capability matters in cancer treatment, where AI trained on MRI data can automatically measure how much a tumor has grown or shrunk after treatment.
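Once the lung and the affected region have each been outlined, the percentage itself is simple arithmetic: affected pixels divided by lung pixels. The sketch below shows that step on a tiny invented slice. The masks and the function name `percent_affected` are assumptions for illustration, and producing accurate masks is the hard part that the AI model handles.

```python
def percent_affected(lung_mask, disease_mask):
    """Return the percentage of lung pixels that are also marked as diseased."""
    lung_pixels = 0
    affected_pixels = 0
    for lung_row, disease_row in zip(lung_mask, disease_mask):
        for in_lung, diseased in zip(lung_row, disease_row):
            if in_lung:
                lung_pixels += 1
                if diseased:
                    affected_pixels += 1
    if lung_pixels == 0:
        raise ValueError("lung mask is empty")
    return 100.0 * affected_pixels / lung_pixels


# Invented 4x4 slice: 1 marks lung tissue (left) and diseased tissue (right).
lung_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]
disease_mask = [
    [0, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]

print(percent_affected(lung_mask, disease_mask))  # 2 of 8 lung pixels
```

On a real CT scan the same count would run over every slice, so the result reflects the whole lung rather than one cross-section.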

Advances continue to enhance this measurement capability. For example, say you have two brain scans taken 6 months apart to track a disease such as multiple sclerosis. The AI can align the two scans and highlight even a small change in a lesion’s size or shape, which may go unnoticed when a human compares the scans by eye. Advanced computer vision in both digital pathology and radiology gives physicians an additional, objective source of data when judging whether a treatment is effective.
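The comparison between two scans can be sketched as two small calculations: convert the number of lesion voxels (3D pixels) into a physical volume, then express the difference as a percentage. The voxel counts and spacing below are invented, and the function names are assumptions. The sketch also takes for granted that the scans are already aligned and the lesion already outlined.

```python
def lesion_volume_mm3(voxel_count, spacing_mm):
    """Volume of a lesion given its voxel count and the voxel size in mm."""
    dx, dy, dz = spacing_mm
    return voxel_count * dx * dy * dz


def percent_change(baseline, follow_up):
    """Relative change from baseline, in percent (positive means growth)."""
    if baseline == 0:
        raise ValueError("baseline volume must be non-zero")
    return 100.0 * (follow_up - baseline) / baseline


# Invented example: the same lesion on two scans taken 6 months apart.
spacing = (1.0, 1.0, 2.0)  # voxel size in mm (assumed identical for both scans)
baseline_volume = lesion_volume_mm3(400, spacing)
follow_up_volume = lesion_volume_mm3(500, spacing)

print(baseline_volume, follow_up_volume)
print(percent_change(baseline_volume, follow_up_volume))
```

A change of this size might be hard to judge by eye, but as a number it is easy to track from visit to visit.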

The AI brings a level of fact-based (i.e., measurable) data to the physician’s evaluation process, in addition to their own professional experience and knowledge. Instead of simply looking for a problem, the physician can now track the patient’s progress using quantifiable data.

Image Analysis AI: Software That Reads Images

Image Analysis AI uses a variety of computer-based techniques to automatically find meaning in images. Unlike a person manually reviewing all images, image analysis AI can identify objects, measure dimensions, detect patterns, and pinpoint important regions. Image analysis AI is used across numerous industries, including medical imaging, security, quality control in manufacturing, retail, agriculture, and remote sensing (satellite imagery).

Most image analysis AI today is built using deep learning, particularly convolutional neural networks (CNNs) and, more recently, vision transformers. Deep learning learns from examples: during training, the model sees a large number of images and their corresponding labels (e.g., “crack,” “tumor,” “cat,” “flood”) and adjusts its internal parameters to minimize error. Once trained, the model can identify objects, predict outcomes, provide confidence levels, and generate pixel-level maps for new images.

Common tasks involve:

- Classification: determining what you see in an image (normal/abnormal).

- Detection: drawing a box around a specific object (lesion).

- Segmentation: defining the exact edges of an area (organ).

- Measurement/Tracking: measuring how large something is, or how it changes with time.

- Anomaly Detection: identifying unusual image characteristics when there are few or no labeled examples of the abnormality to train from.
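To show how the first four tasks differ in what they return, the sketch below starts from one small segmentation mask and derives the other outputs from it: a label (classification), a bounding box (detection), and a pixel count (measurement). In practice each task often has its own model, so deriving everything from a mask is a simplification, and the function name `analyze` is an assumption for this example.

```python
def analyze(mask):
    """Derive a label, bounding box, and area from a 2D segmentation mask."""
    pixels = [
        (r, c)
        for r, row in enumerate(mask)
        for c, value in enumerate(row)
        if value
    ]
    if not pixels:
        return {"label": "normal", "box": None, "area_px": 0}
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    return {
        "label": "abnormal",  # classification: one answer per image
        "box": (min(rows), min(cols), max(rows), max(cols)),  # detection
        "area_px": len(pixels),  # measurement: size of the marked region
    }


# Segmentation output: 1 marks pixels that belong to the lesion.
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]

result = analyze(mask)
print(result)
```

The mask carries the most detail, the box less, and the label least, which is why segmentation is usually the most demanding of the three to train.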

Good performance relies on high-quality images and on training data that resembles what the system will see in real life. If the training data (e.g., viewpoint, camera type, lighting) or the patient population differs significantly from day-to-day use, the model’s accuracy will suffer. Teams using these systems also face a challenge with “explainability,” since many models are considered “black boxes.” To address this, many teams add heatmap or attention-map overlays to the system’s output to help illustrate which parts of the image influenced its decision.

In general, the best results occur when a team combines image analysis AI with human review. Image analysis AI can streamline and accelerate the workflow, eliminate repetitive tasks, and ensure consistency, while the human element provides context, judgment, and accountability for the final determination.

AI in Radiology: Faster, More Accurate Diagnoses

AI in radiology involves using machine learning, particularly deep learning, to assist in the reading, interpretation, and management of medical imaging exams. Radiology generates vast amounts of data from a variety of imaging modalities, including X-ray, CT, MRI, ultrasound, and mammography. Deep learning techniques can be used to identify patterns in these images, prioritize urgent studies, and automate time-consuming manual processes.

One common use of this technology is computer-aided detection and triage. For example, AI may be able to indicate possible intracranial hemorrhage on a head CT, large-vessel occlusion on stroke imaging, pneumothorax on a chest X-ray, or a suspicious-looking lung nodule. When these alerts are incorporated into the clinical workflow, they can move critical studies to the top of the reading list, enabling faster treatment of critically ill patients.
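Moving critical studies to the top of the reading list is, at its core, a sorting problem. The sketch below reorders a small invented worklist by an AI urgency score, breaking ties by how long a study has been waiting. The `Study` fields, the scores, and the tie-break rule are assumptions for illustration, and real worklists apply their own local rules.

```python
from dataclasses import dataclass


@dataclass
class Study:
    accession: str        # hypothetical study identifier
    description: str
    urgency_score: float  # hypothetical AI output between 0 and 1
    minutes_waiting: int


def prioritize(worklist):
    """Order studies by urgency (highest first), then by waiting time."""
    return sorted(
        worklist,
        key=lambda study: (-study.urgency_score, -study.minutes_waiting),
    )


worklist = [
    Study("A100", "Chest X-ray, routine", 0.05, 90),
    Study("A101", "Head CT, possible hemorrhage", 0.97, 5),
    Study("A102", "Chest X-ray, possible pneumothorax", 0.80, 20),
]

ordered = prioritize(worklist)
for study in ordered:
    print(study.accession, study.description)
```

The routine chest X-ray arrived first but is read last. Nothing is removed from the list, so the radiologist still reviews every study.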

AI also makes radiology more quantitative and consistent through segmentation and measurement. For example, tools can automatically identify and quantify organs or lesions, measure the volume of structures or tumors, compare tumor dimensions, estimate skeletal age, and measure calcification in coronary arteries. These measurements reduce the time radiologists spend on such tasks manually and support more consistent assessments when comparing images acquired at different times.

AI can also improve the quality of the imaging itself (e.g., through noise reduction, faster reconstruction, and optimized low-dose CT protocols).

Although there are many advantages to using AI in radiology, it is essential to understand that AI cannot replace radiologists. There are several reasons for this. First, even when an AI model has been trained on thousands of cases, it is still a machine learning model. It may make errors because of how it was trained or because of differences in patient populations, scanning equipment, or scanning protocols. Second, incorrect positive findings can create unnecessary additional work for radiologists, while incorrect negative findings can pose serious risks to patients if clinicians rely too heavily on AI output. For that reason, most developers treat AI output as decision support, with radiologists ultimately responsible for the final interpretation.

To ensure successful adoption of AI-based radiology products, developers need to conduct rigorous clinical validation and obtain the appropriate regulatory clearance or approval (in the U.S., many of these tools are regulated as Class II medical devices). Developers should also consider integrating their product into existing picture archiving and communication systems (PACS) and radiology information systems (RIS), creating user-friendly interfaces, and developing continuous monitoring programs to catch performance degradation over time.

Ultimately, the ideal relationship between AI and radiology professionals is one of collaboration rather than competition. AI provides rapid processing, enhanced pattern recognition, and increased measurement accuracy, while radiologists provide clinical judgment and oversight.

Radiology Image Analysis: Smart Analysis of X-rays and MRIs

Radiology image analysis involves reviewing radiologic images to determine whether anatomical structures are normal, abnormal, or diseased and to help determine the appropriate course of treatment. It spans various imaging modalities, including X-ray, computed tomography (CT), magnetic resonance imaging (MRI), ultrasonography, and mammography. The radiologist combines their interpretation of the images with the patient’s clinical data (lab results, symptoms, etc.) to reach an overall conclusion and write a report of the findings.

Typically, radiologists follow a step-by-step approach when analyzing radiologic images: First, they verify that the correct patient and study have been selected; second, they evaluate the quality of the images; third, they systematically evaluate the key areas of interest (regional assessment); fourth, they compare the new images with the previous images taken for the same patient; fifth, they use standardized terms to describe their findings. When evaluating radiologic images, radiologists look for indicators of disease such as masses, hemorrhage, fracture(s), inflammatory processes, fluid collection(s), enlarged organs, variations in tissue density or signal intensity, etc. In addition to identifying individual indicators of disease, radiologists often analyze patterns in the radiologic images, for example, the distribution of pulmonary opacities, symmetry between left and right sides, and how the tissues enhance after administration of a contrast agent.

Measurements in radiology image assessment are very valuable. Doctors may measure lesion or organ sizes, vessel narrowing, or tumor volumes. Changes over time in cancer patients may indicate their response to treatment. Critical emergency situations like stroke, pulmonary embolisms, internal hemorrhaging, and bowel obstructions require quick identification to influence patient outcomes.

Picture archiving and communication systems (PACS) store images and provide tools for viewing them. Clinicians can adjust window and level settings to bring out subtle differences between similar structures. Using multi-planar reconstructions and 3D imaging, clinicians can better understand complex anatomical structures. Additionally, many AI-assisted tools can segment tumors, highlight areas of concern, and quantify measurements, all of which support the clinician’s decision-making.
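Windowing works by picking a range of raw pixel values and stretching just that range across the screen’s full span from black to white. The sketch below uses a simplified linear version of the idea on CT values measured in Hounsfield units (HU). The brain setting (center 40, width 80) is a commonly cited preset, but treat the numbers and the function name `apply_window` as illustrative assumptions. Viewers implement this with their own exact rules.

```python
def apply_window(hu, center, width):
    """Map a CT value in Hounsfield units to a display gray level (0 to 255)."""
    lower = center - width / 2
    upper = center + width / 2
    if hu <= lower:
        return 0    # everything below the window is shown as black
    if hu >= upper:
        return 255  # everything above the window is shown as white
    return round(255 * (hu - lower) / (upper - lower))


# A narrow "brain" style window spreads small differences in soft tissue
# across the whole gray scale.
brain_center, brain_width = 40, 80
for hu in (-1000, 0, 20, 60, 80, 1000):
    print(hu, apply_window(hu, brain_center, brain_width))
```

With this window, air (about -1000 HU) and dense bone (about 1000 HU) collapse to pure black and pure white, while tissue values only 40 HU apart land on clearly different shades of gray.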

Radiologists are also concerned with quality and safety issues, including radiation exposure from CT scans and X-rays, risks associated with contrast agents, and the potential for incidental findings. The main objective is clear communication: the radiologist will communicate what they see, what they think it might mean, how urgently it needs attention, and recommendations for follow-up or treatment.

Is AI Really More Accurate Than a Doctor?

You have most likely read that “artificial intelligence (AI) is better than doctors at making diagnoses.” The media hype around these research studies can be exciting; however, the vast majority of them test an AI system under very controlled conditions on a single diagnostic task (e.g., identifying a solitary nodule). Real-world clinical practice is much more complicated. An AI system designed to detect lung cancer may not recognize a fractured rib or signs of pneumonia on the same chest X-ray, as your physician would. This is a major difference between how an AI system and a radiologist approach the same image.

Think of it another way: the AI is like a sniffer dog with an extremely keen sense of smell, able to pick up a single scent far better than the average person can. The doctor is like the lead detective in the investigation. The lead detective uses every piece of evidence (symptoms, family medical history, and so on) to build a theory, while the sniffer dog has been trained to detect only one small part of the overall picture. When it pinpoints a single area, that narrow focus gives the lead detective a valuable additional clue.

As such, the best results do not come from an ‘AI vs. human’ battle but from collaboration. Research suggests that AI improves diagnostic accuracy most effectively when used as a ‘second reader.’ In this role, the AI can flag small areas that the human eye may have overlooked, while the doctor provides the essential context needed to make an informed final determination.

Ultimately, the objective is to give your Doctor the best possible tools to utilize so they can be the best Doctor possible.

Who Is in Charge? Your Doctor and Their “AI Co-Pilot”

It is very important to understand that your doctor is always in control. A radiologist and an AI are partners, and the relationship is best viewed with the AI as a highly advanced “co-pilot.”

Think of a modern airline pilot. The airplane’s autopilot handles many functions, such as flying the route and monitoring multiple data streams, and within seconds it can spot things that a human might overlook. However, the experienced pilot has final decision-making authority: handling weather issues, making emergency decisions, and ensuring a safe landing. The AI is the autopilot, providing the doctor with significant support and analysis, while the doctor remains the captain of your medical care.

In the hospital, AI may identify small, questionable abnormalities on an MRI or CT scan that a time-constrained doctor might miss. The AI presents suggestions, not commands. The doctor reviews them, applies their extensive education and experience along with your individual health history, and determines whether you actually have the condition the AI flagged. Data comes from AI; wisdom comes from doctors.

How Do We Know These AI Tools Are Safe and Effective?

Fortunately, these tools are not released to hospitals at random. The U.S. FDA regulates this type of software as a medical device, in the same manner as a new heart monitor or insulin pump. Before an AI tool can assist your physician, it must go through an extensive process to demonstrate that it is safe and effective for use with patients.

For Example, in the USA: The FDA Review Process

The FDA review process for AI imaging software is detailed and involves multiple stages. Although the exact pathway to the clinic differs depending on the type of software, an AI tool typically goes through several rounds of testing before it can be used with patients:

- Extensive Testing on Past Data: The AI is first tested on thousands of previously collected medical images that it has never seen before. This is like the final exam that proves the tool can identify what it was trained to look for without assistance.

- Clinical Validation: Next, the tool will likely be tested within a clinical or simulated clinical environment. Researchers will then test how well the AI performs compared to a doctor to confirm whether the tool truly aids in diagnosis and does not confuse the doctor.

- FDA Review: Finally, a group of FDA scientists, doctors, and engineers will thoroughly review all data collected during testing before the software is cleared for use with patients.
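The “never seen before” requirement in the first step is usually enforced by setting test images aside before training begins. The sketch below splits an invented collection so that every image from a given patient lands on the same side. This is an illustration of the general idea, not a description of the FDA’s procedure, and the patient-level grouping, the 20% test fraction, and the name `split_by_patient` are assumptions.

```python
import random


def split_by_patient(records, test_fraction=0.2, seed=0):
    """Split records into train and test sets without sharing any patient."""
    patient_ids = sorted({record["patient_id"] for record in records})
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    rng.shuffle(patient_ids)
    n_test = max(1, round(len(patient_ids) * test_fraction))
    test_ids = set(patient_ids[:n_test])
    train = [r for r in records if r["patient_id"] not in test_ids]
    test = [r for r in records if r["patient_id"] in test_ids]
    return train, test


# Invented collection: 10 patients with 2 images each.
records = [
    {"patient_id": f"P{i // 2}", "image": f"img_{i}.png"} for i in range(20)
]

train_set, test_set = split_by_patient(records)
train_patients = {r["patient_id"] for r in train_set}
test_patients = {r["patient_id"] for r in test_set}
print(len(train_set), len(test_set), train_patients & test_patients)
```

Grouping by patient matters because two images of the same person look alike. If one were used for training and the other for testing, the exam would be easier than real life.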

This multi-stage process gives reasonable confidence that an “FDA-cleared” AI tool has met the FDA’s standards for performance. It is like a seal of approval confirming that the “co-pilot” is ready for duty.

Beyond Accuracy: How AI Makes a Doctor’s Job Better

A single hospital may generate thousands of medical images daily, and doctors must scrutinize each one with the same care, no matter how many turn out to be normal. This is where AI really excels: it can rapidly sort through the images and bring the most relevant ones to the doctor’s attention first, or automatically perform the measurements on common exams, much like an administrative assistant who handles the most laborious paperwork.

By taking on repetitive work, AI gives doctors a powerful way to manage their caseload. This helps relieve some of the fatigue that leads to errors in medical imaging and frees doctors to think clearly about the complex, ambiguous cases that humans are best suited to solve.

Doctors who work smarter and are less fatigued by their workload can provide better care. They can concentrate on the things that really matter (diagnostic puzzles and patient interaction) rather than just the next item on a routine task list.

What Are the Ethical Questions We Still Need to Answer?

Developing such advanced technologies in healthcare naturally raises important concerns about trust and safety. To do their jobs, AIs have to learn from hundreds of thousands, if not millions, of previous medical scans, so a key issue is privacy. Researchers address this by anonymizing data, removing identifiable patient information (e.g., name, date of birth, medical record number) from the images. The aim is for the AI to learn from the medical data itself, not from any individual’s private file.
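In its simplest form, anonymizing means dropping the identifying fields that travel with each image before the data reaches the researchers. The sketch below does this on an invented metadata record. The field names follow common DICOM keywords, but the short list in `IDENTIFYING_FIELDS` is an assumption for illustration. Real de-identification covers many more fields, as well as text burned into the image itself.

```python
# Illustrative subset only; real de-identification rules are much longer.
IDENTIFYING_FIELDS = {"PatientName", "PatientBirthDate", "PatientID"}


def anonymize(metadata):
    """Return a copy of the metadata without the identifying fields."""
    return {
        key: value
        for key, value in metadata.items()
        if key not in IDENTIFYING_FIELDS
    }


# Invented record for a single scan.
scan_metadata = {
    "PatientName": "DOE^JANE",
    "PatientBirthDate": "19700101",
    "PatientID": "MRN-0001",
    "Modality": "CT",
    "BodyPartExamined": "CHEST",
}

clean = anonymize(scan_metadata)
print(clean)
```

What remains describes the scan, not the person, which is all the AI needs in order to learn what pneumonia or a nodule looks like.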

Another major challenge that researchers must address is algorithmic bias. If the training dataset for an AI consists mainly of data from one specific group of individuals, the AI may be less effective when analyzing scans from patients of other ages, races, or genders. Such an AI is like a student who studies only one textbook and then struggles on the final examination. To avoid this, researchers are working to develop AIs trained on large, diverse datasets so that they are fair and effective for all patients.
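One simple way to look for this kind of bias is to measure how often the AI catches real disease in each patient group separately. The Python sketch below does this for a handful of invented cases; the group names, the data, and the sensitivity_by_group function are assumptions made for illustration, not results from any real system.

```python
from collections import defaultdict

def sensitivity_by_group(cases):
    """For each group, return the fraction of diseased cases the AI flagged.

    cases: iterable of (group, has_disease, ai_flagged) tuples.
    """
    found = defaultdict(int)
    total = defaultdict(int)
    for group, has_disease, ai_flagged in cases:
        if has_disease:
            total[group] += 1
            if ai_flagged:
                found[group] += 1
    return {group: found[group] / total[group] for group in total}

# Invented cases for illustration only.
cases = [
    ("under_50", True, True),
    ("under_50", True, True),
    ("under_50", False, False),
    ("over_50", True, True),
    ("over_50", True, False),
]

rates = sensitivity_by_group(cases)
print(rates)  # {'under_50': 1.0, 'over_50': 0.5}
```

A large gap between groups, like the one in this toy data, would be a signal that the training data may not represent every group equally well.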

Beyond the technology itself, we need to ensure fair access. Will advanced imaging diagnostic systems be limited to large, affluent hospitals, leaving smaller or rural hospitals without them? The ultimate goal is to provide diagnostic imaging systems that are both cost-effective and easily integrated into current practice, so that a patient in a small community receives the same high level of diagnostic capability as one in a major city.

Fortunately, these are not merely theoretical considerations. Any AI imaging system must undergo rigorous testing and receive regulatory clearance or approval from agencies such as the U.S. Food and Drug Administration (FDA) before it can be used on patients. By directly addressing the ethical issues of AI use in diagnostics, researchers and other professionals are laying the groundwork for an improved healthcare experience for all people through the safe, effective application of the technology.

The Future Is Now: What’s Next for Medical AI?

Current AI already helps physicians identify existing medical conditions, but the future of diagnostic AI holds much greater promise. Future generations of this technology will be designed to predict the onset of diseases before they fully develop. Think of a weather application that doesn’t just report that it is currently raining, but also alerts you to a potentially severe storm developing next week so you can prepare for it.

This large step from identification to prediction is made possible by the AI’s ability to see more than just a single image. The next generation of systems will not look at your X-ray or MRI in isolation; it will also look at how the patterns in your scans relate to the other pieces of your health puzzle, such as lab tests, family medical history, and your genetic profile. Just as a detective combines photographs from different locations at a crime scene with witness statements to work out what happened, the AI will combine these sources to build a more complete, predictive picture of your health over time.

This is creating a major shift in medicine itself. Instead of only reacting to disease after it appears, medicine will use artificial intelligence to proactively keep you healthy. A future system may not simply identify a cancerous tumor that has already developed, but instead use your health data to predict, several years in advance, that you are at higher risk of developing a specific disease. That warning will give you and your physician the opportunity to take proactive steps, such as making lifestyle changes or starting treatment earlier, to turn the tide back toward wellness.

Your Empowered Role in the Future of Healthcare

What was once perceived as science fiction is now an actual clinical tool. AI will not replace your physician; it will serve as a “tireless co-pilot” that has reviewed millions of scans, helping a knowledgeable clinician detect the almost imperceptible indicators of disease. This partnership between clinicians and AI is the key to understanding where diagnostic AI is headed.

Armed with this new understanding, you’ll be able to approach healthcare with increased confidence. When AI is part of a diagnosis for yourself or someone you love, you can be an educated partner by asking a very powerful question: “In your work, how do tools such as AI assist you?”

This single, simple inquiry shifts the conversation from one of uncertainty to one of collaboration based on mutual respect and trust.

By combining human experience with machine accuracy, we are working toward a world where answers come more quickly, treatment can start sooner, and doctors spend less time at computers and more time on what matters most: caring for their patients.