Machine learning is a set of methods for building AI models that learn from data, enabling computers to perform tasks such as mimicking human decision-making: transforming inputs into outputs in the form of learned knowledge or decisions. Because the model determines how inputs become outputs, the choice of machine learning model directly affects the success of a given AI application.

Overview of AI and Machine Learning Models

This guide explains the three major families of AI/ML models (supervised, unsupervised, and reinforcement learning) as well as the main deep learning architectures: CNNs, RNNs (including LSTM and GRU), and GANs. For each, it covers typical use cases, representative algorithms, and common problems.

It also covers the importance of high-quality data, data preprocessing, the training and evaluation process, and model selection, which depends on the problem definition, data characteristics, problem complexity, and compute constraints. Finally, it provides a step-by-step process for validation and iterative improvement and surveys emerging trends, such as hybrid models, explainable AI, and AutoML, that improve transparency, accessibility, and performance.

The Role of Data in AI Model Performance

Data is fundamental to all artificial intelligence models and directly influences both training efficiency and predictive accuracy. The quality, quantity, and type of available data therefore play a major role in how well a model will perform.

Data preparation (also called data preprocessing) is the work done on data before a model is trained, such as removing errors and normalizing values so that they are on a consistent scale across the dataset. It is an essential part of the training process, because careful preprocessing gives the model the best chance of performing well on its assigned tasks.
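To make the two preprocessing steps concrete, here is a minimal Python sketch (the guide itself prescribes no language or library). It assumes numeric tabular data stored as lists of rows, treats a missing value (`None`) as the kind of error to remove, and uses min-max scaling as the normalization. The function name `clean_and_normalize` is hypothetical, and standardization (zero mean, unit variance) is an equally common alternative to min-max scaling.

```python
from typing import Optional


def clean_and_normalize(rows: list[list[Optional[float]]]) -> list[list[float]]:
    """Drop rows with missing values, then min-max scale each column to [0, 1]."""
    # Remove "error" rows: here, any row containing a missing (None) value.
    clean = [row for row in rows if all(v is not None for v in row)]
    if not clean:
        return []
    n_cols = len(clean[0])
    # Per-column minimum and maximum over the cleaned data.
    mins = [min(row[j] for row in clean) for j in range(n_cols)]
    maxs = [max(row[j] for row in clean) for j in range(n_cols)]
    scaled = []
    for row in clean:
        new_row = []
        for j, v in enumerate(row):
            span = maxs[j] - mins[j]
            # A constant column has no spread; map it to 0.0 to avoid dividing by zero.
            new_row.append((v - mins[j]) / span if span else 0.0)
        scaled.append(new_row)
    return scaled


rows = [[1.0, 200.0], [None, 150.0], [3.0, 100.0], [2.0, 150.0]]
print(clean_and_normalize(rows))  # → [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]
```

The row with the missing value is dropped before the minimum and maximum are computed, so the bad record cannot distort the scaling of the remaining data.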

AI Model Training and Evaluation Process

Once the data has been prepared and organized, the next step is to train the AI model to identify patterns and act on them. During training, the model repeatedly adjusts its parameters to minimize its prediction errors. When training is complete, the model enters an evaluation phase.
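As a minimal sketch of "adjusting parameters to minimize prediction errors", the Python function below fits a one-variable linear model with batch gradient descent on mean squared error. The toy data, learning rate, and number of epochs are illustrative assumptions, not recommendations; the function name is hypothetical.

```python
def train_linear_regression(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w * x + b by batch gradient descent on mean squared error (MSE)."""
    w, b = 0.0, 0.0  # start from arbitrary parameters
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of MSE = (1/n) * sum(error^2) with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient, which lowers the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1.
w, b = train_linear_regression([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(round(w, 3), round(b, 3))  # → 2.0 1.0
```

Each epoch is one pass of "predict, measure error, adjust parameters"; real models have far more parameters, but the loop has the same shape.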

In the evaluation phase, the model is tested on new, previously unseen data. Evaluation shows whether the model generalizes beyond its training data, that is, whether it will perform well in real-world scenarios and not just on the examples it was trained on.
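A common way to obtain "previously unseen data" is to hold out part of the dataset before training. The Python sketch below (hypothetical helper names, standard library only) shuffles and splits the data, then scores a candidate model only on the held-out part. The 20% test fraction is an assumption, not a rule.

```python
import random


def train_test_split(data, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and split it into (train, test) lists."""
    items = list(data)
    random.Random(seed).shuffle(items)  # fixed seed makes the split reproducible
    n_test = int(len(items) * test_fraction)
    # The first n_test shuffled items are held out; the model never trains on them.
    return items[n_test:], items[:n_test]


def mean_squared_error(predict, pairs):
    """Average squared error of a prediction function over (x, y) pairs."""
    return sum((predict(x) - y) ** 2 for x, y in pairs) / len(pairs)


data = [(x, 2 * x + 1) for x in range(10)]
train, test = train_test_split(data)
print(len(train), len(test))  # → 8 2
# Evaluate a candidate model only on the held-out pairs.
print(mean_squared_error(lambda x: 2 * x + 1, test))  # → 0.0
```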

Choosing the Right AI Model

Selecting a suitable model for a task means weighing several considerations, starting with a clear statement of the problem you are trying to solve.

Next, define the goals you want to achieve. Once the problem and objectives are established, assess your data: its size, its format, and any unusual characteristics. Then determine what computing resources, such as hardware and software, are available for developing the model.

Choosing an inappropriate model leads to unsatisfactory results and an inefficient use of resources, which increases a project's time and cost. For these reasons, it is worth analyzing your options carefully and selecting the model that best balances effectiveness and efficiency.

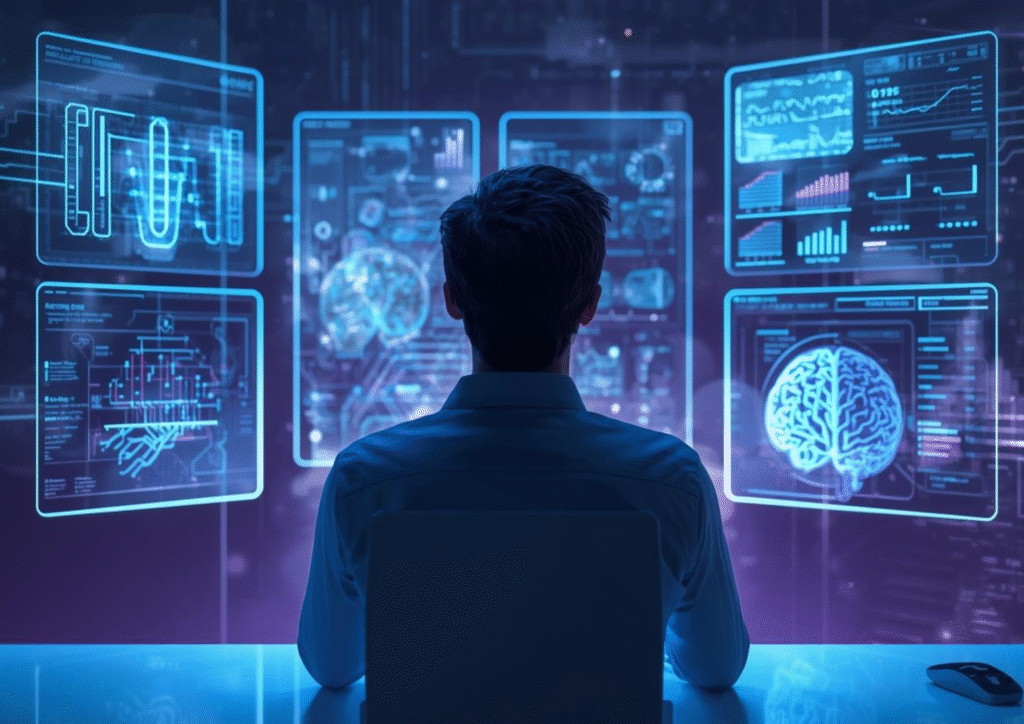

Introduction to Machine Learning Model Types

Machine learning sits within a family of related terms and abbreviations: Artificial Intelligence (AI), Machine Learning (ML), Deep Learning (DL), and Reinforcement Learning (RL). All of them fall under the umbrella of AI. The main objective of machine learning is to build models that learn from data and improve their performance over time. This guide focuses on the most common machine learning models and their distinguishing features.

Supervised Learning Models

Supervised learning uses labeled data, meaning each example comes with its correct output. The model learns a mapping from inputs to those outputs and is evaluated by comparing its predictions with the ground-truth labels. Supervised learning is widely used in image recognition, to classify image content, and in fraud detection, to flag anomalous transactions.

In addition, supervised learning can be invaluable when performing predictive analytics tasks, such as forecasting future trends from historical data. Some of the most common supervised learning algorithms include Linear Regression (for predicting continuous values), Logistic Regression (for predicting binary outcomes), and Support Vector Machines (SVMs) (for classification).
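To illustrate the "predicting binary outcomes" case, here is a minimal Python sketch of one-feature logistic regression trained by gradient descent on log loss. The data, learning rate, epoch count, and function names are illustrative assumptions; production code would normally use an established library rather than a hand-written loop.

```python
import math


def sigmoid(z):
    """Squash a real number into a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(xs, ys, lr=0.5, epochs=1000):
    """Fit P(y = 1 | x) = sigmoid(w * x + b) by gradient descent on mean log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # For log loss, the gradient per example is (predicted probability - label) * input.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b


def predict_label(w, b, x, threshold=0.5):
    """Turn the predicted probability into a binary outcome."""
    return 1 if sigmoid(w * x + b) >= threshold else 0


# Toy labeled data: negative inputs belong to class 0, positive inputs to class 1.
xs = [-3.0, -2.0, -1.0, 1.0, 2.0, 3.0]
ys = [0, 0, 0, 1, 1, 1]
w, b = train_logistic_regression(xs, ys)
print([predict_label(w, b, x) for x in (-2.5, 2.5)])  # → [0, 1]
```

The labels in `ys` are what make this supervised: every update compares the model's prediction with the known answer.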

A significant drawback of supervised learning is that a good model needs a large amount of accurate, correctly labeled data. Another common problem is overfitting: the model performs well on its training data but poorly on new data. Robust validation strategies are needed to detect and prevent it.

Unsupervised Learning Models

In contrast to supervised learning, unsupervised learning uses unlabeled data. The goal of an unsupervised model is to discover intrinsic structure or patterns within a dataset without being told what to look for.

Common applications include customer segmentation, which groups similar customers for targeted marketing, and anomaly detection, which flags unusual patterns (outliers) that may indicate errors or fraud.

Another application is dimensionality reduction: compressing large datasets into smaller representations while preserving the most important information. Common unsupervised algorithms include K-means clustering, hierarchical clustering, and Principal Component Analysis (PCA).
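K-means is simple enough to sketch in full. The Python function below (hypothetical name, standard library only, 2-D points assumed) alternates between assigning points to the nearest centroid and moving each centroid to the mean of its points. It uses random initialization from the data; practical implementations often use smarter seeding such as k-means++ and multiple restarts.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Basic k-means (Lloyd's algorithm) for 2-D points given as (x, y) tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = [
            tuple(sum(coord) / len(c) for coord in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: centroids (and therefore assignments) stopped changing
        centroids = new_centroids
    return centroids, clusters


near = [(0, 0), (0, 1), (1, 0)]
far = [(10, 10), (10, 11), (11, 10)]
centroids, clusters = kmeans(near + far, k=2)
print(sorted((round(x, 2), round(y, 2)) for x, y in centroids))
# → [(0.33, 0.33), (10.33, 10.33)]
```

No labels are involved: the algorithm finds the two groups on its own, but deciding what each group means is still up to a human, which is the interpretation challenge described next.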

One of the biggest challenges with unsupervised models is interpreting the results, for example determining what each cluster represents. Because there are no labels to compare against, measuring how well a model performs is also difficult.

Reinforcement Learning Models

Reinforcement learning (RL) trains an agent to act by rewarding desirable actions and penalizing undesirable ones. It draws on ideas from behavioral psychology and is well suited to dynamic environments. RL plays a vital role in robotics, where it lets robots learn from interacting with their environment, and in game AI, where it produces agents that learn and improve through experience.

RL is also applied to autonomous vehicles to improve navigation and decision-making. Key RL algorithms include Q-learning, Deep Q-Networks (DQN), and Proximal Policy Optimization (PPO). One of the hardest problems in RL training is balancing exploration (trying new actions) against exploitation (repeating actions already known to pay off). Training an agent to a useful level also tends to require substantial computational resources, significant time, or both.
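The sketch below shows tabular Q-learning with epsilon-greedy exploration in Python. The environment is a made-up five-state corridor (reward only at the far end), and all parameter values are illustrative assumptions. The `epsilon` check is where the exploration/exploitation trade-off described above appears in code.

```python
import random


def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a made-up corridor environment.

    The agent starts in state 0; action 0 moves left, action 1 moves right.
    Reaching the last state ends the episode with reward 1; every other step gives 0.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: explore at random with probability epsilon (or on ties),
            # otherwise exploit the action with the higher current estimate.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Move the estimate toward reward + discounted value of the best next action.
            best_next = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
    return q


q = q_learning_corridor()
print(["right" if q[s][1] > q[s][0] else "left" for s in range(len(q) - 1)])
# → ['right', 'right', 'right', 'right']
```

DQN replaces the table `q` with a neural network, which is what makes the approach usable when there are too many states to list.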

Deep Learning Models and Architectures

Deep Learning (DL) is a subfield of Machine Learning (ML) that uses artificial neural networks with multiple layers. These stacked layers enable DL systems to process and interpret large volumes of complex data and to identify associations that are otherwise difficult to discern.

DL models are therefore best suited to large datasets and to high-dimensional data, in which each example has many attributes or characteristics. Problems of this kind are often complex enough to benefit from the sophisticated capabilities of DL algorithms.
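A small example of why layers matter: a single linear layer cannot compute XOR, but two layers with a nonlinearity (ReLU here) can. The Python sketch below uses hand-picked weights rather than trained ones, and the helper names are hypothetical; it only illustrates what stacking layers computes, not how a deep network is trained.

```python
def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights · inputs + bias) for each output unit."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def xor_network(x1, x2):
    # Hand-picked (not trained) weights. Hidden layer: relu(x1 + x2) and relu(x1 + x2 - 1).
    hidden = dense([x1, x2], [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu)
    # Output layer combines the hidden units linearly: h1 - 2 * h2.
    return dense(hidden, [[1.0, -2.0]], [0.0], identity)[0]


print([xor_network(a, b) for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]])
# → [0.0, 1.0, 1.0, 0.0]
```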

Convolutional Neural Networks (CNNs)

- CNNs are designed to process data with a grid-like topology, such as images. They use convolutional layers to extract local features and have proven very successful at recognizing patterns in images.

- One of the most common applications of CNNs is facial recognition systems that can identify and verify individuals from images. Another significant application of CNNs is in autonomous vehicles, where they can detect other cars and road obstacles by interpreting visual information.

- In addition to these commercial applications, CNNs also help doctors diagnose various medical conditions from scans and X-rays.

- Notable CNN architectures include VGGNet, a simple, uniform network that achieves good results through its depth, stacking many small (3×3) convolutional layers; ResNet, which uses residual (skip) connections to make much deeper networks trainable than was previously possible; and Inception, whose modules apply convolutions of several filter sizes in parallel and combine their outputs to improve performance.

- Training CNNs can require substantial computing resources and large datasets. When training data is limited relative to the size of the network, CNNs are prone to overfitting, in which the model fits the training data too closely. Techniques such as dropout and data augmentation help the model generalize to new data.
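To show what a convolutional layer computes, here is a minimal Python sketch of a single-channel 2-D convolution with no padding and stride 1. Like CNN frameworks, it actually computes cross-correlation (the kernel is not flipped). The kernel here is a hand-set vertical-edge detector, an assumption for illustration; in a real CNN the kernel weights are learned during training.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation, as in CNN layers), stride 1, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Slide the kernel over the image and take the weighted sum of the patch under it.
            row.append(sum(kernel[a][b] * image[i + a][j + b]
                           for a in range(kh) for b in range(kw)))
        output.append(row)
    return output


# A 4x4 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1] for _ in range(4)]
edge_kernel = [[-1, 1], [-1, 1]]  # responds where intensity increases from left to right
print(conv2d(image, edge_kernel))  # → [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The strong response in the middle column marks the edge; stacking many such learned filters is how CNNs build up features.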

Recurrent Neural Networks (RNNs)

- RNNs are designed to process sequential information, which makes them well suited to time series analysis and natural language processing (NLP). An RNN maintains a hidden state that carries information from previous inputs forward, so it can take earlier parts of a sequence into account when processing later ones.

- RNNs are widely used in language modeling, where a model predicts the next word in a sentence after receiving all prior words. In addition, RNNs have been used in translation tasks, where they convert text from one language to another, and in speech recognition systems, which convert spoken words to written text.

- Several RNN variants exist. Long Short-Term Memory (LSTM) networks were developed to alleviate the vanishing gradient problem and capture long-term dependencies. An LSTM uses three gates (input, forget, and output) plus a separate cell state, whereas the Gated Recurrent Unit (GRU) is a simpler design with two gates (update and reset), in which the update gate plays the combined role of the LSTM's input and forget gates.

- RNNs are challenging to train because gradients propagated back through many time steps can vanish or explode. Exploding gradients are commonly handled with gradient clipping, while vanishing gradients are mitigated by gated architectures such as LSTMs and GRUs.
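The Python sketch below illustrates two of the ideas above under simplifying assumptions: a vanilla RNN with a single hidden unit (real RNNs use vectors and weight matrices), and gradient clipping by L2 norm, the usual remedy for exploding gradients. Function names and parameter values are hypothetical.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a one-unit vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_(t-1) + b)."""
    h = h0
    states = []
    for x in inputs:
        # The new hidden state mixes the current input with the previous state (the "memory").
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


def clip_by_norm(grads, max_norm):
    """Gradient clipping: rescale a gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]


# A single nonzero input leaves a trace in the hidden state that fades at each later step.
states = rnn_forward([1.0, 0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(h, 3) for h in states])
# An oversized gradient (norm 5) is scaled down to norm 1, keeping its direction.
print(clip_by_norm([3.0, 4.0], max_norm=1.0))
```

The shrinking hidden state shows, informally, how information from early steps fades in a plain RNN; gated cells such as LSTM and GRU are designed to preserve it longer.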

Generative Adversarial Networks (GANs)

- A GAN consists of two neural networks trained against each other. The generator network produces outputs, such as images, typically starting from random noise.

- The discriminator network, a second neural network, evaluates whether a sample is real (drawn from the training data) or fake (produced by the generator). GANs are used to generate realistic images from random noise, to generate videos, and to generate images from textual descriptions, commonly referred to as text-to-image synthesis.

- DCGAN (Deep Convolutional GAN) is one of the most common GAN architectures. It builds both networks from convolutional layers and follows design guidelines that make training more stable.

- The StyleGAN architecture enables the creation of higher-quality images and gives users greater control over the “style” of the photos.

- CycleGAN translates images from one domain to another (for example, photographs to paintings) without requiring a dataset of paired images. While GANs offer powerful image synthesis capabilities, their adversarial nature makes them difficult to train.

- Typical problems include mode collapse, in which the generator produces only a few kinds of output, and training instability. It is therefore crucial to tune hyperparameters and monitor training closely.
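The adversarial setup can be made concrete through the two loss functions. This Python sketch follows the standard GAN formulation with the commonly used non-saturating generator loss; the networks themselves are omitted and represented only by the discriminator's output probabilities, which is a simplifying assumption.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator, averaged over a batch.

    d_real: discriminator outputs (probability of "real") on real samples.
    d_fake: discriminator outputs on samples produced by the generator.
    The discriminator is rewarded for pushing d_real toward 1 and d_fake toward 0.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants d_fake pushed toward 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# When the discriminator outputs 0.5 for everything, it cannot tell real from fake.
print(discriminator_loss([0.5], [0.5]), generator_loss([0.5]))
# A sharper discriminator lowers its own loss and raises the generator's.
print(discriminator_loss([0.9], [0.1]), generator_loss([0.1]))
```

Because each network's improvement worsens the other's loss, training is a moving target; this is the root of the instability and mode collapse described above. At 0.5 everywhere, the discriminator loss equals 2 ln 2 (about 1.386).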

Step-by-Step Guide to AI Model Selection

The right artificial intelligence (AI) model depends on the problem you want to address, the data you are working with, and the results you are seeking. The following guidelines can help with this decision:

Determine the objective(s) of your AI project. What do you want to accomplish? Are you trying to classify items, predict a value, group items into categories, or is there another objective? Once you understand what you want to accomplish, you can focus on the types of AI models that will meet your goals.

Identify the primary goals of your AI project. Are you trying to improve the accuracy of your predictions, reduce the amount of time you spend processing data, or make your application more straightforward to use? Your objectives will guide your decisions about which AI model to select.

Evaluate how complex the problem you are trying to solve is. Harder problems may require more sophisticated models. When judging difficulty, consider both the kind of solution needed and the resources you have available to build it.

Weigh the potential benefits of a successful solution. If the payoff is large, investing in an advanced model may be justified even if it requires more effort and expense. If the payoff is modest, a simpler model built at lower cost may be the better choice.

Examine your data. Is it labeled (i.e., does each item in your dataset have a corresponding "answer")? Does it need to be processed in a particular sequence? How much data do you have, and what condition is it in? Your data will largely dictate whether you need supervised learning (when the data has labels), unsupervised learning (when the data has no labels), or reinforcement learning (when an agent learns from reward signals gathered by interacting with an environment).

Assess the quality and quantity of your data. A large, high-quality dataset is likely to produce a model that performs well. When data is scarce or of poor quality, you may need to augment it with additional examples, clean it to remove errors, or both.

Assess the characteristics of your data, including its dimensionality, distribution, and missing values. Each of these characteristics affects the model you can select and the pre-processing steps you will need to take.
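A quick per-column profile covers the characteristics listed above: the number of columns gives the dimensionality, the missing count flags gaps, and the mean and standard deviation summarize the distribution. The Python sketch below assumes numeric rows with `None` for missing values; `profile_columns` is a hypothetical name, and a real assessment would also look at full distributions (e.g., histograms).

```python
import statistics


def profile_columns(rows):
    """Summarize each column of a tabular dataset: missing count, mean, and spread.

    rows: equal-length lists of numbers, with None marking a missing value.
    """
    n_cols = len(rows[0])  # dimensionality: number of features per example
    report = []
    for j in range(n_cols):
        present = [row[j] for row in rows if row[j] is not None]
        report.append({
            "column": j,
            "missing": len(rows) - len(present),
            "mean": statistics.mean(present) if present else None,
            # Population standard deviation as a simple measure of the distribution's spread.
            "std": statistics.pstdev(present) if present else None,
        })
    return report


rows = [[1.0, None], [3.0, 4.0], [None, 8.0]]
for summary in profile_columns(rows):
    print(summary)
```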

Ensure your data is readily accessible and can be imported easily into the framework of your selected model. Storing it in a widely supported format, such as CSV files or a database, makes integration with AI tools simpler.

Match the model's complexity to your problem. Simpler models sometimes work just as well as more complex ones, especially with smaller datasets. Aim for a balance between complexity and performance: more complicated models may produce better results, but they also require more processing power and longer training times.

Assess the available processing power and memory. This determines whether you can train large, complex models.

Finally, assess how scalable your model needs to be. Can the model you choose scale up to accommodate growing datasets and changing conditions? A scalable model provides the flexibility to continue using your AI tool over time.

Model Testing, Validation, and Iterative Improvement

AI model development is iterative: test several model versions, assess each one's performance, and iterate again to improve both performance and operational efficiency. Use rigorous testing and validation procedures to evaluate each model; for example, cross-validation checks whether performance holds up across different subsets of the data, which helps reveal overfitting.
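As a minimal sketch of k-fold cross-validation, the Python code below splits the data into k folds, trains on k - 1 of them, validates on the remaining one, and averages the scores. For simplicity it uses contiguous folds without shuffling (an assumption; real data should usually be shuffled first), and the two toy models (a mean baseline and a least-squares line) are hypothetical examples for comparison.

```python
def k_fold_indices(n, k):
    """Yield (train_indices, validation_indices) for k contiguous folds over n examples."""
    # Spread the remainder so fold sizes differ by at most one.
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size


def cross_validate(xs, ys, fit, score, k=5):
    """Average validation score over k folds.

    fit(train_xs, train_ys) returns a predict function; score(predict, xs, ys) returns a number.
    """
    results = []
    for train, val in k_fold_indices(len(xs), k):
        predict = fit([xs[i] for i in train], [ys[i] for i in train])
        results.append(score(predict, [xs[i] for i in val], [ys[i] for i in val]))
    return sum(results) / len(results)


def mse(predict, xs, ys):
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_mean(train_xs, train_ys):
    """Baseline model: always predict the training mean."""
    mean = sum(train_ys) / len(train_ys)
    return lambda x: mean


def fit_line(train_xs, train_ys):
    """Least-squares straight line through the training data."""
    mx = sum(train_xs) / len(train_xs)
    my = sum(train_ys) / len(train_ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(train_xs, train_ys))
             / sum((x - mx) ** 2 for x in train_xs))
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


xs = list(range(10))
ys = [2 * x + 1 for x in xs]
print(cross_validate(xs, ys, fit_mean, mse), cross_validate(xs, ys, fit_line, mse))
```

Every example is used for validation exactly once, so the averaged score is less sensitive to one lucky or unlucky split than a single hold-out test.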

Define the performance metrics you will use to assess your models, such as accuracy, precision, recall, or F1 score, based on the nature of the problem you are trying to solve. Promote a culture of continuous improvement: refine models iteratively, and periodically update and retrain them with new data to preserve their performance.
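The metrics named above can be computed directly from true and predicted labels. This Python sketch assumes binary labels with 1 as the positive class; the function name is hypothetical, and returning 0.0 when a ratio is undefined is one convention among several.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    accuracy = (tp + tn) / len(pairs)
    # Report 0.0 when a ratio is undefined (a convention, not a universal rule).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
print({name: round(value, 3) for name, value in binary_metrics(y_true, y_pred).items()})
# → {'accuracy': 0.75, 'precision': 0.667, 'recall': 0.667, 'f1': 0.667}
```

Accuracy alone can look good when one class dominates; precision and recall show how the model does on the positive class specifically, which matters in tasks such as fraud detection.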

Future Directions in AI Model Development

As artificial intelligence (AI) has evolved, so have AI models. Rapid advances in computing resources, access to large datasets, and new algorithms have enabled new types of models. One example is hybrid AI models, which combine components from multiple AI models.

Hybrid AI models leverage the strengths of several models while minimizing their individual drawbacks, which can give organizations stronger, more flexible AI systems. A hybrid model might, for example, combine supervised, unsupervised, and reinforcement learning.

Personalized recommendation systems are a common business example of hybrid AI: they use multiple approaches to analyze user data and provide tailored recommendations. Because hybrid models integrate diverse methodologies, they can be challenging to develop, but the versatility they offer is substantial.
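As a small illustration of combining approaches in a recommender, the Python sketch below blends a content-based score (tag similarity to items the user liked) with a non-personalized popularity score. This "weighted hybrid" is only one simple hybrid style and does not combine all three learning paradigms; the item data, the Jaccard similarity, and the `weight` parameter are illustrative assumptions, and `hybrid_scores` is a hypothetical name.

```python
def hybrid_scores(user_likes, item_tags, popularity, weight=0.5):
    """Rank unseen items by blending a content-based score with a popularity score.

    user_likes: set of item ids the user liked.
    item_tags: dict mapping item id -> set of descriptive tags.
    popularity: dict mapping item id -> popularity in [0, 1].
    weight: share of the final score given to the content-based part.
    """
    # Tags of everything the user liked, used as a simple user profile.
    liked_tags = set().union(*(item_tags[i] for i in user_likes))
    scores = {}
    for item, tags in item_tags.items():
        if item in user_likes:
            continue  # only recommend items the user has not seen
        # Content-based part: Jaccard similarity between the item's tags and the profile.
        union = tags | liked_tags
        content = len(tags & liked_tags) / len(union) if union else 0.0
        # Popularity part: a non-personalized signal shared across users.
        scores[item] = weight * content + (1 - weight) * popularity.get(item, 0.0)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


tags = {"a": {"scifi", "space"}, "b": {"scifi", "robots"}, "c": {"romance"}}
pop = {"a": 0.5, "b": 0.2, "c": 0.9}
for w in (0.5, 0.9):
    print(w, [item for item, _ in hybrid_scores({"a"}, tags, pop, weight=w)])
# → 0.5 ['c', 'b']
# → 0.9 ['b', 'c']
```

Shifting `weight` changes which signal dominates, which is the kind of trade-off hybrid designs have to tune.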

As AI systems continue to be integrated into organizations’ decision-making processes, the need for greater transparency and accountability will grow. Explainable AI (XAI) focuses on increasing the transparency of AI models so humans can better understand them. Increasing the transparency of AI models is essential to establishing trust between users and the organizations that use them, and to ensuring they are used ethically.

XAI provides insight into how the AI model makes its decisions. Some tools organizations can use to improve the explainability of their AI models include feature importance analysis, model interpretability tools, and visualization tools. With XAI, organizations can help ensure that the AI models they develop comply with regulatory and ethical standards.
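One widely used form of feature importance analysis is permutation importance: shuffle one feature's values and measure how much the model's score drops. The Python sketch below is a minimal, model-agnostic version under stated assumptions (a `predict` function over list rows and a higher-is-better metric); the names are hypothetical, and library implementations typically repeat the shuffle several times and average.

```python
import random


def permutation_importance(predict, X, y, metric, seed=0):
    """Importance of each feature = drop in score after shuffling that feature's column.

    predict: maps one feature row (a list) to a prediction.
    metric: metric(y_true, y_pred) -> score, where higher is better.
    """
    rng = random.Random(seed)
    baseline = metric(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)
        # Replace only feature j, breaking its relationship with the target.
        shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
        importances.append(baseline - metric(y, [predict(row) for row in shuffled]))
    return importances


def neg_mse(y_true, y_pred):
    """Negative mean squared error, so that higher is better."""
    return -sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy model that depends only on feature 0; feature 1 is irrelevant noise.
X = [[float(i), float((i * 7) % 5)] for i in range(20)]
y = [3.0 * row[0] for row in X]
print(permutation_importance(lambda row: 3.0 * row[0], X, y, neg_mse))
```

Feature 1 gets an importance of zero because the model ignores it, which is exactly the kind of insight into a model's decisions that XAI aims to provide.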

Automated machine learning (AutoML) is transforming model development. AutoML automates much of the end-to-end process of applying machine learning to real-world problems, making it usable by non-experts. With AutoML, organizations without in-house expertise can still leverage machine learning to gain a competitive advantage.

AutoML lowers the barrier to entry for AI by automating many steps in the development process, including data preprocessing, model selection, and hyperparameter tuning. This democratization of AI is expected to drive wider adoption of machine learning across industries and, with it, greater innovation.
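At its simplest, the hyperparameter-tuning step that AutoML automates is a search over candidate settings scored on validation data. The Python sketch below shows exhaustive grid search only; real AutoML systems use more efficient strategies (for example, random search or Bayesian optimization) and also automate preprocessing and model selection. `toy_validation_score` is a hypothetical stand-in for "train a model with these settings and score it on validation data".

```python
from itertools import product


def grid_search(train_and_score, param_grid):
    """Try every combination of settings in param_grid and keep the best-scoring one.

    train_and_score(params) must train a model with those settings and return a
    validation score where higher is better; it is supplied by the caller.
    """
    keys = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = train_and_score(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_score(params):
    # Hypothetical stand-in for "train, then score on validation data":
    # it peaks at learning_rate=0.1 and depth=3.
    return -(params["learning_rate"] - 0.1) ** 2 - (params["depth"] - 3) ** 2


grid = {"learning_rate": [0.01, 0.1, 1.0], "depth": [1, 3, 5]}
best, score = grid_search(toy_validation_score, grid)
print(best)  # → {'learning_rate': 0.1, 'depth': 3}
```

Adding a key such as `"model"` to the grid would extend the same loop to simple model selection.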

Conclusion

Anyone who wants to tap into the potential of AI must understand the different types of AI models and how they are applied. Selecting the right model and continually improving it can significantly increase project performance and create room for innovation. Staying informed about technological advancements, and adapting to them, will be essential for capitalizing on AI. Take advantage of what AI has to offer and let it drive meaningful transformation in your work.

Q&A

Question: How do I decide between supervised, unsupervised, and reinforcement learning for my problem?

Short answer:

Step 1: Determine what type of data you have.

Step 2: Determine your goals for using machine learning.

Step 3: Identify which type of machine learning algorithm you should use.

If you have labeled data and you know that you want to take input values and transform them into output values (classification, regression, fraud detection, image recognition, etc.), then you will want to use supervised learning with algorithms such as Linear/Logistic Regression, SVMs, etc.

If you have no labeled data and would like to understand patterns in your data (customer segmentation, anomaly detection, compression), you will want to use unsupervised learning techniques such as K-Means, Hierarchical Clustering, PCA, etc.

If you want an agent to learn how to interact with its environment based on rewards (robotics, game playing, autonomous vehicle navigation, etc.), you will want to use reinforcement learning with algorithms such as Q-Learning, Deep Q-Network (DQN), Proximal Policy Optimization (PPO), etc.
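The rules of thumb in this answer can be written as a tiny decision function. This Python sketch is a deliberate simplification (real projects can mix paradigms, as the section on hybrid models notes); `suggest_approach` and `EXAMPLE_ALGORITHMS` are hypothetical names, and the algorithm lists are taken from the answer above.

```python
# Example algorithms per paradigm, taken from the answer above.
EXAMPLE_ALGORITHMS = {
    "supervised": ["Linear Regression", "Logistic Regression", "SVM"],
    "unsupervised": ["K-Means", "Hierarchical Clustering", "PCA"],
    "reinforcement": ["Q-Learning", "DQN", "PPO"],
}


def suggest_approach(has_labels: bool, learns_from_rewards: bool) -> tuple[str, list[str]]:
    """Apply the rules of thumb: rewards -> RL, labels -> supervised, otherwise unsupervised."""
    if learns_from_rewards:
        paradigm = "reinforcement"  # an agent learns by interacting with an environment
    elif has_labels:
        paradigm = "supervised"  # inputs come with known outputs
    else:
        paradigm = "unsupervised"  # look for structure in unlabeled data
    return paradigm, EXAMPLE_ALGORITHMS[paradigm]


print(suggest_approach(has_labels=False, learns_from_rewards=False))
# → ('unsupervised', ['K-Means', 'Hierarchical Clustering', 'PCA'])
```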

Question: When should you use CNNs, RNNs, and GANs?

Short answer: You want to match the network architecture to both the type of data you are working with and the nature of the task you are trying to solve.

CNNs work best on grid-like data, particularly images (e.g., object detection, facial recognition, medical imaging); common architectures include VGGNet, ResNet, and Inception.

RNNs are a good fit for sequential data (e.g., language modeling, translation, speech recognition). LSTM and GRU variants often outperform vanilla RNNs because they are better at capturing long-term dependencies in sequences.

GANs are used to generate, translate, and transform data (e.g., unpaired image-to-image translation, text-to-image synthesis, image and video generation); well-known variants include DCGAN, StyleGAN, and CycleGAN. CNNs and GANs typically require large training sets and substantial computational resources, and GANs are additionally hard to train. RNNs suffer from vanishing gradients, which LSTM and GRU architectures mitigate.

Question: What are the key steps for training and validating a model so it generalizes well?

Short answer:

- Prepare high-quality data by cleaning and normalizing it.
- Train the model by finding the parameter values that minimize prediction error.
- Check how well the model generalizes by evaluating its predictions on unseen data.
- Measure performance with metrics suited to your task (e.g., accuracy, precision, recall, F1).
- Use cross-validation to make the evaluation more robust and to detect overfitting.
- Iterate: compare different models, fine-tune each model's hyperparameters, and refine the preprocessing.
- Update and retrain the model(s) with new data as it becomes available to maintain performance.

Question: What are some of the most common issues you will encounter when training models, and what can you do to prevent or alleviate these issues?

Short answer: One of the most common issues in supervised and deep learning training is overfitting. Detect it by evaluating your model on a held-out dataset, and reduce it with techniques such as regularization, dropout, and data augmentation.
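Dropout, one of the techniques just mentioned, is easy to sketch. The Python function below implements "inverted" dropout on a plain list of activations: during training each activation is zeroed with probability `p` and the survivors are scaled up so the expected value is unchanged; at inference time it does nothing. The function name and list-based representation are assumptions for illustration; frameworks apply the same idea to tensors.

```python
import random


def dropout(activations, p, training=True, rng=None):
    """Inverted dropout: zero each activation with probability p during training and
    scale the survivors by 1 / (1 - p) so the expected activation is unchanged."""
    if not training or p == 0.0:
        return list(activations)  # at inference time dropout is a no-op
    if rng is None:
        rng = random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


out = dropout([1.0] * 8, p=0.5, rng=random.Random(0))
print(out)  # each entry is either 0.0 or 2.0
print(dropout([1.0, 2.0], p=0.5, training=False))  # → [1.0, 2.0]
```

Randomly silencing units prevents the network from relying too heavily on any single one, which tends to reduce overfitting.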

Another issue that frequently occurs during Recurrent Neural Network (RNN) training is the vanishing and exploding gradient problem. To alleviate it, use Long Short-Term Memory (LSTM) or Gated Recurrent Unit (GRU) architectures against vanishing gradients, and gradient clipping against exploding gradients. GANs have their own issues, including mode collapse and unstable training.

Carefully tune hyperparameters and closely monitor the GAN’s behavior to avoid these issues. When training models using Reinforcement Learning, you must be mindful of the need to explore the environment while also exploiting knowledge gained from past experiences (i.e., balancing exploration and exploitation).

Algorithms such as Proximal Policy Optimization (PPO), which limit how far each policy update can move, help keep this learning process stable. Also keep in mind that RL training requires significant computational resources and time. More generally, make sure the complexity of your model does not exceed what your data and resources can support, since an overly complex model is harder to train reliably and can become unstable.

Question: How do hybrid models, explainable AI, and AutoML fit into a modern AI workflow?

Short answer: Hybrid models combine supervised, unsupervised, and reinforcement learning techniques to leverage the strengths of each (for example, in personalized recommendations), although integrating the methods can be complex.

Explainable AI increases transparency and trust by revealing how AI models make decisions, using tools such as feature importance and visualization. It is often essential for ethical use and regulatory compliance.

AutoML automates data preprocessing, model selection, and hyperparameter tuning to streamline development, lower the barrier to entry, and accelerate deployment, while developers still benefit from human oversight of the process.